Automated test equipment (ATE) is computer-controlled test and measurement equipment that allows for testing with minimal human interaction. The device being tested is referred to as the device under test (DUT). The advantages of this kind of testing include reduced testing time, repeatability, and cost efficiency at high volume. The chief disadvantages are the upfront costs of programming and setup.

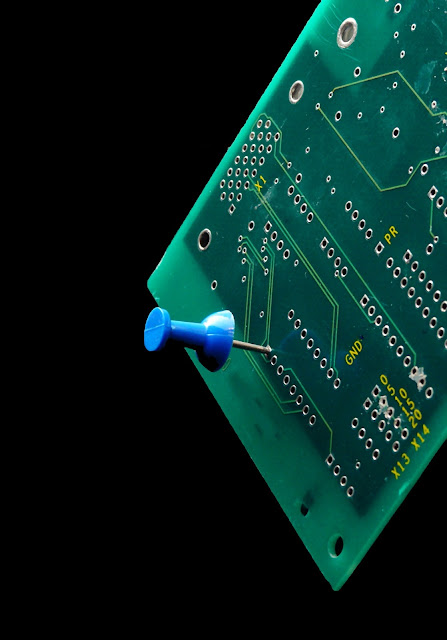

Automated test equipment is used for semiconductor testing, printed circuit board testing, and interconnection and verification testing. It is commonly used in wireless communication and radar. Simple ATEs include volt-ohm meters that measure resistance and voltages in PCs; complex ATE systems have several mechanisms that automatically run high-level electronic diagnostics.

ATE is used to quickly confirm whether a DUT works and to find defects. When the first out-of-tolerance value is detected, testing stops and the device is marked as failed.
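The stop-on-first-failure behavior described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `TestStep` class, the `run_stop_on_fail` function, the step names, and the limits are all hypothetical, and the measurements are simulated with fixed values instead of real instrument reads.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class TestStep:
    """One measurement with its tolerance window (hypothetical structure)."""
    name: str
    measure: Callable[[], float]  # returns the measured value
    low: float                    # lower tolerance limit
    high: float                   # upper tolerance limit


def run_stop_on_fail(
    steps: List[TestStep],
) -> Tuple[bool, Optional[str], List[Tuple[str, float]]]:
    """Run steps in order and stop at the first out-of-tolerance value.

    Returns (passed, name_of_failed_step_or_None, measurements_taken).
    """
    results: List[Tuple[str, float]] = []
    for step in steps:
        value = step.measure()
        results.append((step.name, value))
        if not (step.low <= value <= step.high):
            # First out-of-tolerance value: stop testing, the device fails.
            return False, step.name, results
    return True, None, results


# Simulated measurements (assumed values, not real instrument data).
steps = [
    TestStep("supply_voltage_V", lambda: 3.31, 3.135, 3.465),
    TestStep("idle_current_mA", lambda: 12.8, 0.0, 10.0),
    TestStep("output_level_V", lambda: 1.20, 1.14, 1.26),
]

passed, failed_at, results = run_stop_on_fail(steps)
print(passed, failed_at, len(results))
```

In this sketch the third step is never measured, because the second step already falls outside its limits. Stopping early saves test time on failing devices, at the cost of not recording every defect on them.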

Semiconductor Testing

For ATEs that test semiconductors, the architecture consists of a master controller (a computer, such as an industrial PC) that synchronizes one or more source and capture instruments. Typical system components also include a mass interconnect. The DUT is physically connected to the ATE by a machine called a handler, or prober, and through a customized Interface Test Adapter (ITA) that adapts the ATE's resources to the DUT.

Packaged parts are placed on a customized interface board by a handler, while silicon wafers are tested directly with high-precision probes.

Test Types

Logic Testing

Logic test systems are designed to test microprocessors, gate arrays, ASICs, and other logic devices.

Linear or mixed-signal equipment tests components such as analog-to-digital converters (ADCs), digital-to-analog converters (DACs), comparators, track-and-hold amplifiers, and video products. These components incorporate features such as audio interfaces, signal processing functions, and high-speed transceivers.
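As an illustration of what a mixed-signal check on an ADC might look like, the sketch below compares a code read back from the converter against the ideal code for a known input voltage. It assumes a unipolar ADC with a simple truncating transfer function and a pass criterion of ±1 LSB. Those choices, along with the function names `ideal_adc_code` and `adc_code_ok`, are assumptions for the example and are not taken from any particular tester or device.

```python
def ideal_adc_code(vin: float, vref: float, bits: int) -> int:
    """Ideal output code of a unipolar ADC (assumed truncating transfer function)."""
    full_scale = (1 << bits) - 1
    code = int(vin / vref * (1 << bits))
    # Clamp to the valid code range: inputs below 0 V or at/above vref saturate.
    return max(0, min(full_scale, code))


def adc_code_ok(vin: float, vref: float, bits: int,
                measured_code: int, max_error_lsb: int = 1) -> bool:
    """Pass if the measured code is within max_error_lsb of the ideal code."""
    return abs(measured_code - ideal_adc_code(vin, vref, bits)) <= max_error_lsb


# An 8-bit ADC with a 2.5 V reference and 1.25 V applied (assumed values).
print(ideal_adc_code(1.25, 2.5, 8))    # 128
print(adc_code_ok(1.25, 2.5, 8, 129))  # True: 1 LSB off
print(adc_code_ok(1.25, 2.5, 8, 131))  # False: 3 LSB off
```

A production test would typically sweep many input levels and derive linearity figures from the results; a single-point check like this one only shows the comparison step.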

Passive component ATEs test passive components such as capacitors, resistors, and inductors. Typically, testing is done by applying a test current.
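For a resistor, the test-current approach reduces to Ohm's law: force a known current, measure the resulting voltage, and compare the computed resistance with the nominal value. The sketch below covers only that resistor case. The function names, the 1 mA test current, and the 1% tolerance are assumptions chosen for illustration.

```python
def measure_resistance(test_current_a: float, measured_voltage_v: float) -> float:
    """Resistance from a forced test current and the measured voltage (R = V / I)."""
    if test_current_a <= 0:
        raise ValueError("test current must be positive")
    return measured_voltage_v / test_current_a


def within_tolerance(measured: float, nominal: float, tolerance_pct: float) -> bool:
    """True if measured is within +/- tolerance_pct percent of nominal."""
    return abs(measured - nominal) <= nominal * tolerance_pct / 100.0


# A nominal 1 kOhm, 1% resistor tested at 1 mA; 1.004 V is a simulated reading.
r = measure_resistance(0.001, 1.004)
ok = within_tolerance(r, 1000.0, 1.0)
print(round(r, 3), ok)
```

Capacitors and inductors need a time-varying stimulus, so a tester would use a different measurement for them than this DC calculation.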

Discrete ATEs test active components including transistors, diodes, MOSFETs, regulators, TRIACs, Zener diodes, SCRs, and JFETs.

Printed Circuit Board Testing

Printed circuit board testers include manufacturing defect analyzers, in-circuit testers, and functional analyzers.

Manufacturing defect analyzers (MDAs) detect manufacturing defects, such as shorts and missing components, but cannot test digital ICs because they test with the DUT powered down (cold). As a result, they assume the ICs are functional. MDAs are much less expensive than other test options and are also referred to as analog circuit testers.

In-circuit analyzers test components that are part of a board assembly; the components under test are "in a circuit," and the DUT is powered up (hot). In-circuit testers are very powerful but are limited by the high density of tracks and components in most current designs, because the contact pins must be placed very accurately to make good contact. They are also referred to as digital circuit testers or ICT.

A functional test simulates an operating environment and tests a board against its functional specification. Functional automatic test equipment (FATE) is unpopular because the equipment has not been able to keep up with the increasing speed of boards, which causes a lag between the board under test and the manufacturing process. There are several types of functional test equipment, and they may also be referred to as emulators.

Interconnection and Verification Testing

Test types for interconnection and verification include cable and harness testers and bare-board testers.

Cable and harness testers are used to detect opens (missing connections), shorts (unintended connections), and miswires (wrong pins) on cable harnesses, distribution panels, wiring looms, flexible circuits, and membrane switch panels with commonly used connector configurations. Other tests performed by this equipment include resistance and hipot tests.
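The three cable fault classes named above can be distinguished by comparing an expected wiring map with measured continuity. The following is a minimal sketch, assuming the tester reports, for each near-end pin, the set of far-end pins that show continuity. The `classify_cable` function and the data layout are hypothetical and not modeled on any specific tester.

```python
from typing import Dict, List, Set


def classify_cable(expected: Dict[int, int],
                   measured: Dict[int, Set[int]]) -> Dict[str, List]:
    """Compare measured continuity against the expected pin-to-pin wiring.

    expected: near-end pin -> far-end pin it should connect to
    measured: near-end pin -> set of far-end pins showing continuity
    """
    faults: Dict[str, List] = {"opens": [], "shorts": [], "miswires": []}
    for near, far in expected.items():
        found = measured.get(near, set())
        if not found:
            # No continuity at all: missing connection.
            faults["opens"].append(near)
        elif found == {far}:
            continue  # wired exactly as expected
        elif far in found:
            # Correct connection present, plus unintended extra connections.
            faults["shorts"].append((near, sorted(found - {far})))
        else:
            # Continuity exists, but only to the wrong pin(s).
            faults["miswires"].append((near, sorted(found)))
    return faults


# A straight-through 5-wire cable (assumed example data).
expected = {1: 1, 2: 2, 3: 3, 4: 4, 5: 5}
measured = {1: {1, 2}, 2: {1, 2}, 3: {4}, 4: {3}, 5: set()}

faults = classify_cable(expected, measured)
print(faults)
```

With this data, pins 1 and 2 are reported as shorted together, pins 3 and 4 as swapped (miswired), and pin 5 as open.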

Bare-board automated test equipment is used to verify that the circuits on a PCB are complete before assembly and wave soldering.